Google Research and DeepMind have developed AMIE (Articulate Medical Intelligence Explorer), an AI system designed to assist in medical diagnostics through natural conversations. Initially focused on text-based interactions, AMIE now incorporates multimodal capabilities, enabling it to analyze medical images, such as radiographs and skin photos, thanks to integration with the Gemini model.

A randomized, blinded virtual Objective Structured Clinical Examination (OSCE) study involving 105 cases compared AMIE's performance with that of primary care physicians (PCPs). The study assessed diagnostic accuracy, image interpretation, management reasoning, communication skills, and empathy. Specialist physicians and patient actors evaluated the consultations.

Key Findings:

While AMIE remains a research prototype, these findings highlight its potential to augment clinical decision-making, especially in settings with limited access to specialists. Further research and real-world evaluations are necessary before AMIE can be integrated into clinical practice.

research.google / nature.com / arxiv.org

With increasingly demanding consumers, developing a successful product requires more than just knowing about the product itself. You need to understand business, people, technology, design, and… a little bit of everything.

Explore the top free and paid AI tools for 2025, including the best AI chatbots, image and video generators, research assistants, and productivity apps. Compare features, pricing, and top picks for creative and professional use.

"Vibe Coding" is a form of programming where developers interact with code in a conceptual, AI-assisted manner, rather than manually writing each line.

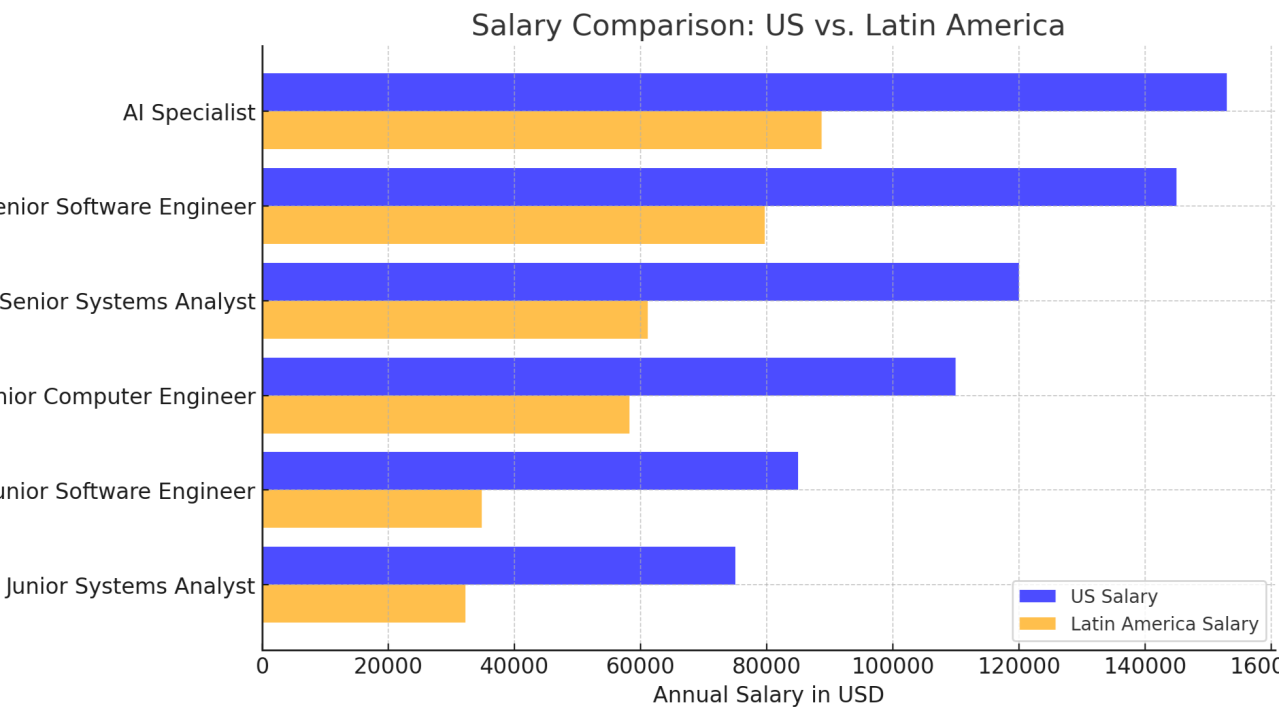

The shortage of technology professionals, including programmers, engineers, and analysts, has intensified in the United States due to the increasing demand for specialized skills and a limited supply of qualified talent.

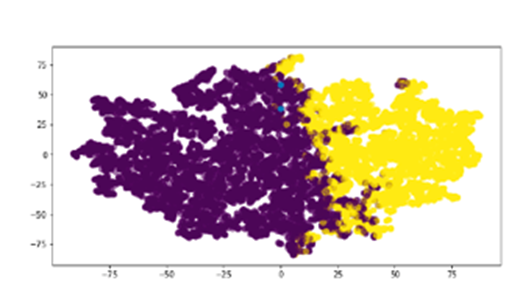

The need arises from having to streamline the analysis of exams performed on patients who may present pathologies detectable through imaging studies, helping physicians reach a faster and more accurate diagnosis.

Researchers at Sakana.AI, a Tokyo-based company, have worked on developing a large language model (LLM) designed specifically for scientific research.